SELECT Statement Syntax:

SELECT 语句语法:

1 | SELECT [ALL | DISTINCT] select_expr, select_expr, ... |

ALL and DISTINCT clauses

ALL 和 DISTINCT 子句

ALL and DISTINCT options specify whether duplicate rows are returned.

ALL 和 DISTINCT 选项指定是否返回重复的行。

If not specified, the default is ALL (all rows are returned).

如果未指定,默认值为 ALL(返回所有行)。

DISTINCT specifies that duplicate rows are removed from the result set.

DISTINCT 指定从结果集中移除重复的行。

Group by

Group by(分组)

Group by is used with aggregate functions to group results by one or more columns.

Group by 与聚合函数一起使用,按一列或多列对结果进行分组。

The GROUP BY clause is used to group all the records in a result set using a particular collection column.

GROUP BY 子句用于使用特定的集合列对结果集中的所有记录进行分组。

It is used to query a group of records.

它用于查询一组记录。

HAVING clause

HAVING 子句

It’s similar to WHERE clause, we use it with GROUP BY clause for filtering the data.

它类似于 WHERE 子句,我们将其与 GROUP BY 子句一起使用以过滤数据。

Limit

Limit(限制)

The results of a typical query can return a large number of rows.

典型查询的结果可能会返回大量的行。

The LIMIT clause puts an upper limit on the number of rows returned:

LIMIT 子句对返回的行数设定了上限:

Like and RLike

Like 和 RLike

Like is a standard SQL statement.

Like 是标准的 SQL 语句。

RLike is an extension of Hive. You can specify matching conditions through java regular expressions.

RLike 是 Hive 的扩展。你可以通过 Java 正则表达式指定匹配条件。

LIKE is an operator similar to LIKE in SQL. We use LIKE to search for string with similar text.

LIKE 是一个类似于 SQL 中 LIKE 的运算符。我们使用 LIKE 来搜索具有相似文本的字符串。

RLIKE (Right-Like) is a special function in Hive where if any substring of A matches with B then it evaluates to true. It also obeys Java regular expression pattern.

RLIKE(Right-Like)是 Hive 中的一个特殊函数,如果 A 的任何子字符串与 B 匹配,则其计算结果为真。它也遵循 Java 正则表达式模式。

Users don’t need to put % symbol for a simple match in RLIKE.

用户不需要在 RLIKE 中为简单匹配放置 % 符号。

Hive Subqueries

Hive 子查询

A Query present within a Query is known as a sub query. The main query will depend on the values returned by the subqueries.

存在于查询中的查询称为子查询。主查询将取决于子查询返回的值。

Subqueries can be classified into two types:

子查询可以分为两类:

Subqueries in FROM clause

FROM 子句中的子查询

SELECT <column names 1, 2…n> From (SubQuery)

SELECT <列名 1, 2…n> From (子查询) <主表名>

1 | SELECT col1 FROM (SELECT a+b AS col1 FROM t1) t2 |

Subqueries in WHERE clause

WHERE 子句中的子查询

SELECT <column names 1, 2…n> From

SELECT <列名 1, 2…n> From <主表名> WHERE col1 IN (子查询);

1 | SELECT name,age FROM emp WHERE emp.emp_id IN (SELECT id FROM dept); |

Joins in Hive

Hive 中的连接(Joins)

A join in Hive is used for the same purpose as in a traditional RDBMS.

Hive 中的连接用于与传统关系型数据库(RDBMS)相同的目的。

A join is used to fetch meaningful data from two or more tables based on a common value or field. In other words, a join is used to combine data from multiple tables.

连接用于基于共同的值或字段从两个或多个表中获取有意义的数据。换句话说,连接用于组合来自多个表的数据。

A join is performed whenever multiple tables are specified inside the FROM clause.

每当在 FROM 子句中指定多个表时,就会执行连接。

The Hive syntax is as follows:

Hive 语法如下:

1 | SELECT table_fields |

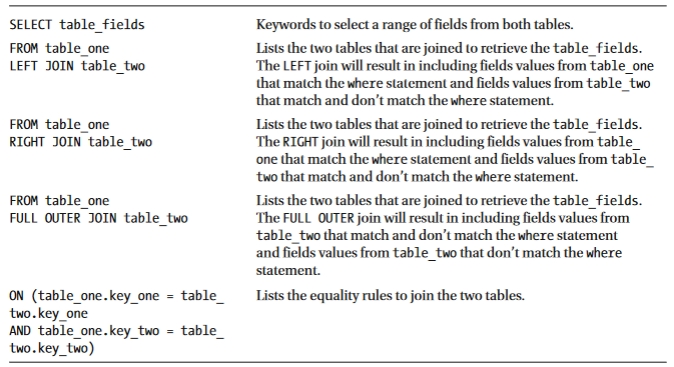

Using Outer Joins

使用外连接(Outer Joins)

Hive supports equality joins between tables using LEFT, RIGHT, and FULL OUTER joins, where keys have no match.

Hive 支持使用 LEFT(左)、RIGHT(右)和 FULL OUTER(全外)连接在表之间进行等值连接,处理键不匹配的情况。

The Hive syntax is as follows:

Hive 语法如下:

1 | SELECT table_fields |

Using Largest Table Last

最后使用最大的表

Hive performs joins by buffering the first tables of the join and then mapping the last table against them.

Hive 通过缓冲连接中的前几个表,然后将最后一个表映射到它们来执行连接。

It’s good practice to always list the biggest table last because this speeds up the processing.

始终将最大的表列在最后是一种很好的做法,因为这样可以加快处理速度。

ORDER BY (ASC|DESC)

ORDER BY (ASC|DESC)(排序)

This is similar to the RDBMS ORDER BY statement.

这类似于 RDBMS 的 ORDER BY 语句。

A sorted order is maintained across all of the output from every reducer.

在每个 reducer 的所有输出中都保持排序顺序。

It performs the global sort using only one reducer, so it takes a longer time to return the result.

它仅使用一个 reducer 执行全局排序,因此返回结果需要更长的时间。

Usage with LIMIT is strongly recommended for ORDER BY.

强烈建议将 ORDER BY 与 LIMIT 一起使用。

SORT BY (ASC|DESC)

SORT BY (ASC|DESC)(局部排序)

This indicates which columns to sort when ordering the reducer input records.

这指示在对 reducer 输入记录进行排序时要对哪些列进行排序。

This means it completes sorting before sending data to the reducer.

这意味着它在将数据发送到 reducer 之前完成排序。

The SORT BY statement does not perform a global sort and only makes sure data is locally sorted in each reducer unless we set mapred.reduce.tasks=1.

SORT BY 语句不执行全局排序,只确保数据在每个 reducer 中局部排序,除非我们设置 mapred.reduce.tasks=1。

In this case, it is equal to the result of ORDER BY.

在这种情况下,它等同于 ORDER BY 的结果。

Use more than 1 reducer

使用超过 1 个 reducer

1 | SET mapred.reduce.tasks = n; // n=2, 3… |

Use only 1 reducer

仅使用 1 个 reducer

1 | SET mapred.reduce.tasks = 1; |

DISTRIBUTE BY

DISTRIBUTE BY(按…分发)

Rows with matching column values will be partitioned to the same reducer. When used alone, it does not guarantee sorted input to the reducer.

具有匹配列值的行将被分区到同一个 reducer。当单独使用时,它不保证 reducer 的输入是排序的。

1 | SELECT name, emp_id FROM emp DISTRIBUTE BY emp_id; |

Used with SORT BY

与 SORT BY 一起使用

1 | SELECT name, emp_id FROM emp DISTRIBUTE BY emp_id SORT BY name; |

CLUSTER BY

CLUSTER BY(聚类/分簇)

This is a shorthand operator to perform DISTRIBUTE BY and SORT BY operations on the same group of columns.

这是在同一组列上执行 DISTRIBUTE BY 和 SORT BY 操作的简写运算符。

And, it is sorted locally in each reducer.

并且,它在每个 reducer 中进行局部排序。

This can be used as follows:

用法如下:

1 | SELECT name, emp_id FROM emp CLUSTER BY name; |

Hive Views

Hive 视图

Basically, Apache Hive View is similar to Hive tables, that are generated on the basis of requirements. As a Hive view, we can save any result set data. Well, we can say its usage is as same as the use of views in SQL. Although, we can perform all type of DML operations on Hive views.

基本上,Apache Hive 视图类似于 Hive 表,它是根据需求生成的。作为一个 Hive 视图,我们可以保存任何结果集数据。嗯,我们可以说它的用法与 SQL 中视图的用法相同。虽然,我们可以在 Hive 视图上执行所有类型的 DML 操作。

1 | create view <viewname> as select |

Skipping Header and Footer records while loading in table

在加载表时跳过页眉和页脚记录

In many of the source files we have observed some lines present on the top (header) and some lines at the bottom (footer), which are not actual data but contains so information about the data or the file.

在许多源文件中,我们观察到顶部(页眉)和底部(页脚)存在一些行,它们不是实际数据,而是包含有关数据或文件的一些信息。

So we can either go to file and manually delete these header and footer lines, before creating table on it, which is not recommended.

所以我们要么在创建表之前去文件手动删除这些页眉和页脚行,这是不推荐的。

Or we can skip these header and footer lines while creating table using table properties.

或者我们可以在创建表时使用表属性跳过这些页眉和页脚行。

We created table mentioning property “skip.header.line.count”=”2”, since we want to skip the top 2 lines from the file and “skip.footer.line.count”=”2”, since we want to skip bottom 2 lines from the file.

我们创建表时提到了属性 “skip.header.line.count”=”2”,因为我们想跳过文件的前 2 行,以及 “skip.footer.line.count”=”2”,因为我们想跳过文件的最后 2 行。

1 | create table if not exists Tb_Employee |

Immutable and Mutable table

不可变和可变表

Mutable Table : All the tables by default are mutable.

可变表:默认情况下,所有表都是可变的。

Mutable table allows to append the data when data already present in table.

可变表允许在表中已有数据时追加数据。

Immutable Table: A Table can be created as immutable table, by setting its table property to True.

不可变表:可以通过将其表属性设置为 True 来创建不可变表。

By default, this property is false.

默认情况下,此属性为 false。

1 | create table if not exists EMP1 |

Immutable property allows us to load the data for the first time. After the first data insert, the successive insert into commands will fail resulting in only one set of data into table.

不可变属性允许我们首次加载数据。第一次插入数据后,后续的 insert into 命令将失败,导致表中只有一组数据。

Insert into command on Non-Empty Immutable Table: If we will try to use insert into command on Immutable Hive table then we will get error saying, inserting into non-empty immutable table is not allowed.

非空不可变表上的 Insert into 命令:如果我们尝试在不可变 Hive 表上使用 insert into 命令,我们会收到错误消息,提示不允许插入非空不可变表。

Insert Overwrite command on Non-Empty Immutable Table: All the previous data from Immutable table will be removed and new data will be inserted.

非空不可变表上的 Insert Overwrite 命令:不可变表中的所有先前数据都将被删除,并将插入新数据。

Hive Null Format property

Hive Null 格式属性

This property allows the given value passed to this property, to be treated as null value.

此属性允许将传递给此属性的给定值视为 null 值。

Let’s see an example.

让我们看一个例子。

Below File to be used as source, contains 3 columns, but there are 3 rows (2nd, 3rd and 5th row) in which value of 2nd column is missing.

下面用作源的文件包含 3 列,但有 3 行(第 2、3 和 5 行)缺少第 2 列的值。

Like in 2nd row, after value 30, 2nd column value is missing.

就像在第 2 行中,在值 30 之后,缺少第 2 列的值。

1 | 20,20-April-2021,Shanghai |

Now if we try to load this file into a hive table having 3 columns, hive will obviously load this file successfully, but if we try to capture the data from table having 2nd column value as NULL, it would return zero rows. Because by default nothing is null between the delimiters. Everything is data for Hive between the delimiters. Hive won’t treat a missing value as NULL value. It will be normal value for Hive, even if it is empty.

现在,如果我们尝试将此文件加载到具有 3 列的 hive 表中,hive 显然会成功加载此文件,但是如果我们尝试从表中获取第 2 列值为 NULL 的数据,它将返回零行。因为默认情况下,分隔符之间没有任何内容是 null。分隔符之间的所有内容对 Hive 来说都是数据。Hive 不会将缺失值视为 NULL 值。即使它是空的,对于 Hive 来说它也是正常值。

1 | create table if not exists DEPT_DET |

Data in the table without table Null format property

没有表 Null 格式属性的表中的数据

1 | select * from dept_det; |

Data in the table with table Null format property

具有表 Null 格式属性的表中的数据

Let’s create another table – We have used table property “serialization.null.format” is equals to “” (empty) as we want to replace empty fields with null.

让我们创建另一个表 – 我们使用了表属性 “serialization.null.format” 等于 “”(空),因为我们想用 null 替换空字段。

In case we want to replace spaces with null we would have to change the value (after equal to sign) while setting table property, so that it gets treated as null.

如果我们想用 null 替换空格,我们在设置表属性时必须更改(等号后的)值,以便将其视为 null。

1 | create table if not exists DEPT_DET1 |